Well, a couple of weeks have passed and it’s time to get back to blogging. And for this week, here is the Alexa post that I mentioned a little while ago, back in December last year.

First, to anticipate a later part of this post, here is an extract of Alexa reciting the first few lines of Wordsworth’s Daffodils…

It has been a busy time for Alexa generally – Amazon have extended sales of several of the hardware gizmos to many other countries. That’s well and good for everyone: the bonus for us developers is that they have also extended the range of countries into which custom skills can be deployed. Sometimes with these expansions Amazon helpfully does a direct port to the new locale, and other times it’s up to the developer to do this by hand. So when skills appeared in India, everything I had done to that date was copied across automatically, without me having to do my own duplication of code. From Monday Jan 8th the process of generating default versions for Australia and New Zealand will begin. And Canada is also now in view. Of course, that still leaves plenty of future catch-up work, firstly making sure that their transfer process worked OK, and secondly filling in the gaps for combinations of locale and skill which didn’t get done. The full list of languages and countries to which skills can be deployed is now:

- English (UK)

- English (US)

- English (Canada)

- English (Australia / New Zealand)

- English (India)

- German

- Japanese

Based on progress so far, Amazon will simply continue extending this to other combinations over time. I suspect that French Canadian will be quite high on their list, and probably other European languages – for example Spanish would give a very good international reach into Latin America. Hindi would be a good choice, and Chinese too, presupposing that Amazon start to market Alexa devices there. Currently an existing Echo or Dot will work in China if hooked up to a network, but so far as I know the gadgets are not on sale there – instead several Chinese firms have begun producing their own equivalents. Of course, there’s nothing to stop someone in another country accessing the skill in one or other of the above languages – for example a Dutch person might consider using either the English (UK) or German option.

To date I have not attempted porting any skills in German or Japanese, essentially through lack of necessary language skills. But all of the various English variants are comparatively easy to adapt to, with an interesting twist that I’ll get to later.

So my latest skill out of the stable, so to speak, is Wordsworth Facts. It has two parts – a small list of facts about the life of William Wordsworth, his family, and some of his colleagues, and also some narrated portions from his poems. Both sections will increase over time as I add to them. It was interesting, and a measure of how text-to-speech technology is improving all the time, to see how few tweaks were necessary to get Alexa to read these extracts tolerably well. Reading poetry is harder than reading prose, and I was expecting difficulties. The choice of Wordsworth helped here, as his poetry is very like prose (indeed, he was criticised for this at the time). As things turned out, in this case some additional punctuation was needed to get these sounding reasonably good, but that was all. Unlike some of the previous reading portions I have done, there was no need to tinker with phonetic alphabets to get words sounding right. It certainly helps not to have ancient Egyptian, Canaanite, or futuristic names in the mix!

And this brings me to one of the twists in the internationalisation of skills. The same letter can sound rather different in different versions of English when used in a word – you say tomehto and I say tomarto, and all that. And I necessarily have to dive into custom pronunciations of proper names of characters and such like – Damariel gets a bit messed up, and even Mitnash, which I had assumed would be easily interpreted, gets mangled. So part of the checking process will be to make sure that where I have used a custom phonetic version of someone’s name, it comes out right.
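To make the idea of a custom phonetic version concrete, here is a minimal sketch in Python. It wraps chosen names in the SSML `<phoneme>` tag, with an optional override per locale. The `PRONUNCIATIONS` table, the helper names, and above all the IPA transcriptions are placeholders invented for illustration; they are not the values used in any published skill.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical pronunciation table: a default entry per name, plus optional
# per-locale overrides. The IPA strings are illustrative guesses only.
PRONUNCIATIONS = {
    "default": {"Damariel": "dəˈmɑːriɛl", "Mitnash": "ˈmɪtnæʃ"},
    "en-US": {"Damariel": "dəˈmɑriɛl"},
}


def phoneme(word, ipa):
    """Wrap a word in an SSML phoneme tag using the IPA alphabet."""
    return f'<phoneme alphabet="ipa" ph={quoteattr(ipa)}>{escape(word)}</phoneme>'


def to_ssml(text, locale):
    """Return SSML for text, substituting custom pronunciations for known names."""
    # Locale-specific entries win over the defaults.
    overrides = {**PRONUNCIATIONS["default"], **PRONUNCIATIONS.get(locale, {})}
    out = escape(text)
    # Simple sequential replacement; fine as long as no name contains another.
    for name, ipa in overrides.items():
        out = out.replace(escape(name), phoneme(name, ipa))
    return f"<speak>{out}</speak>"


if __name__ == "__main__":
    print(to_ssml("Mitnash met Damariel.", "en-US"))
    print(to_ssml("Mitnash met Damariel.", "en-GB"))
```

Keeping the overrides in one table means the per-locale check reduces to listening to each name once in each English variant and adding an entry only where the default comes out wrong.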

Wordsworth Facts is live across all of the English variants listed above – just search in your local Amazon store in the Alexa Skills section by name (or to see all my skills to date, search for “DataScenes Development”, which is the identity I use for coding purposes). If you’re looking at the UK Alexa Skills store, this is the link.

The next skill I am planning to go live with, probably in the next couple of weeks, is Polly Reads. Those who read this blog regularly – or indeed the Before The Second Sleep blog (see this link, or this, or this) – may well think of Polly as Alexa’s big sister. Polly can use multiple different voices and languages rather than a fixed one, though Polly is focused on generating speech rather than interpreting what a user might be saying (the module in Amazon’s suite that does the comprehension bit is called Lex). So Polly Reads is a compendium of all the various book readings I have set up using Polly, to which I’ll add a few of my own author readings where I haven’t yet set Polly up with the necessary text and voice combinations. The skill is kind of like a playlist, or maybe a podcast, and naturally my plan is to extend the set of readings over time. More news of that will be posted before the end of the month, all being well.

The process exposed a couple of areas where I would really like Amazon to enhance the audio capabilities of Alexa. The first was when using the built-in ability to access music (i.e. not my own custom skill). Compared to a lot of Alexa interaction, this feels very clunky – there is no easy way to narrow in on a particular band, for example – “The band is Dutch and they play prog rock but I can’t remember the name” could credibly come up with Kayak, but doesn’t. There’s no search facility built in to the music service. And you have to get the track name pretty much dead on – “Alexa, Play The Last Farewell by Billy Boyd” gets you nowhere except for an “I can’t find that” message, since it is called “The Last Goodbye”. A bit more contextual searching would be good. Basically, this boils down to a shortfall in what technically we call context, and what in a person would be short-term memory – the coder of a skill has to decide exactly what snippets of information to remember from the interaction so far, and anything which is not explicitly remembered will be discarded.
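For a custom skill, that explicit remembering is done through session attributes: whatever the skill puts in `sessionAttributes` in one response is handed back with the next request in the same session, and nothing else carries over. A minimal Python sketch follows, working directly on the request and response JSON. The intent names `ChoosePoemIntent` and `ReadItIntent` and the `poem` slot are hypothetical, made up for the example.

```python
def build_response(speech, attributes, end_session=False):
    """Build a skill response that carries the remembered attributes forward."""
    return {
        "version": "1.0",
        "sessionAttributes": attributes,
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


def handle_intent(event):
    """Handle one turn, remembering only what we explicitly choose to keep."""
    # Attributes from the previous response arrive here; may be absent or null.
    remembered = dict(event.get("session", {}).get("attributes") or {})
    intent = event["request"]["intent"]
    slots = intent.get("slots", {})

    if intent["name"] == "ChoosePoemIntent":  # hypothetical intent name
        remembered["poem"] = slots["poem"]["value"]
        return build_response(f"Okay, {remembered['poem']}. Say read it.", remembered)

    if intent["name"] == "ReadItIntent":  # hypothetical intent name
        poem = remembered.get("poem")
        if poem is None:
            # Nothing was remembered, so the skill has to ask again.
            return build_response("Which poem would you like?", remembered)
        return build_response(f"Reading {poem}.", remembered, end_session=True)

    return build_response("Sorry, I didn't catch that.", remembered)
```

If the `remembered["poem"] = ...` line were dropped, the second turn would have no idea which poem was meant, which is exactly the short-term memory gap described above.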

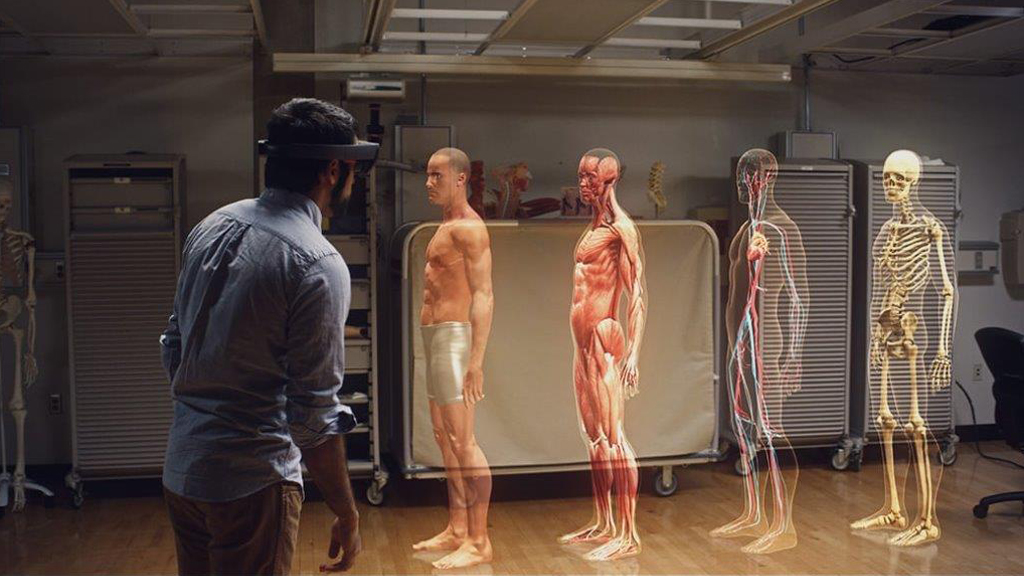

That was a user-moan. The second is more of a developer-moan. Playing audio tracks of more than a few seconds – like a book extract, or a decent length piece of music – involves transferring control from your own skill to Alexa, who then manages the sequencing of tracks and all that. That’s all very well, and I understand the purpose behind it, but it also means that you have lost some control over the presentation of the skill as the various tracks play. For example, on the new Echo Show (the one with the screen) you cannot interleave the tracks with relevant pictures – like a book cover, for example. Basically the two bits of capability don’t work very well together. Of course all these things are very new, but it would be great to see some better integration between the different pieces of the jigsaw. Hopefully this will be improved with time…
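For anyone curious what that handover looks like, here is a rough Python sketch of the response a skill returns to start long-form playback, based on my understanding of the `AudioPlayer.Play` directive. The function name, the URL, and the token are placeholders for illustration only.

```python
def play_audio_response(url, token, offset_ms=0):
    """Build a response asking Alexa's audio player to take over playback."""
    return {
        "version": "1.0",
        "response": {
            "directives": [
                {
                    "type": "AudioPlayer.Play",
                    # Replace anything currently playing or queued.
                    "playBehavior": "REPLACE_ALL",
                    "audioItem": {
                        "stream": {
                            "url": url,  # audio file served over HTTPS
                            "token": token,  # opaque id used to recognise the track later
                            "offsetInMilliseconds": offset_ms,
                        }
                    },
                }
            ],
            # The skill session ends here; Alexa manages the stream from now on.
            "shouldEndSession": True,
        },
    }


if __name__ == "__main__":
    # Placeholder URL and token, not a real reading.
    print(play_audio_response("https://example.com/audio/extract-01.mp3", "extract-01"))
```

Once this is returned, the skill only hears about the track again through playback events, which is why it is awkward to keep a matching picture on screen while the audio runs.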

That’s it for now – back to reading and writing.