Let’s be clear right at the start – this is not a blame-the-computer post so much as a blame-the-programmer one! It is all too easy, these days, to blame the device for one’s ills, when in actual fact most of the time the blame should be directed at those who coded the system. One day – maybe one day quite soon – it might be reasonable to blame the computer, but we’re not nearly at that stage yet.

So this post began life with frustration caused by one of the several apps we use at work. The organisation in question, which shall remain nameless, recently updated their app, no doubt for reasons which seemed good to them. The net result is that the app is now much slower and more clunky than it was. A simple query, such as you need to do when a guest arrives, is now a ponderous and unreliable operation, often needing to be repeated a couple of times before it works properly.

Now, having not so long ago been professionally involved with software testing, I started thinking. What had gone wrong? How could a bunch of (most likely) very capable programmers have produced an app which – from a user’s perspective – was so obviously a step backwards?

Of course I don’t know the real answer to that, but my guess is that the guys and girls working on this upgrade never once did what I have to do most days – stand in front of someone who has just arrived, after (possibly) a long and difficult journey, using a mobile network connection which is slow or lacking in strength. In those circumstances, you really want the software to just work, straight away. I suspect the team just ran a bunch of tests inside their superfast corporate network, ticked a bunch of boxes, and shipped the result.

Now, that’s just one example of this problem. We all rely very heavily on software these days – in computers, phones, cars, or wherever – and we’ve become very sophisticated in what we want and don’t want. Speed is important to us – I read recently that every additional second that a web page takes to load loses a considerable fraction of the potential audience. Allegedly, 40% of people give up on a page if it takes longer than 3 seconds to load, and Amazon reckon that a slowdown in page loading of just one second costs the sales equivalent of $1.6 billion per year. Sainsbury’s ought to have read that article… their shopping web app is lamentably slow. But as well as speed, we want the functionality to just work. We get frustrated if the app we’re using freezes, crashes, loses changes we’ve made, and so on.

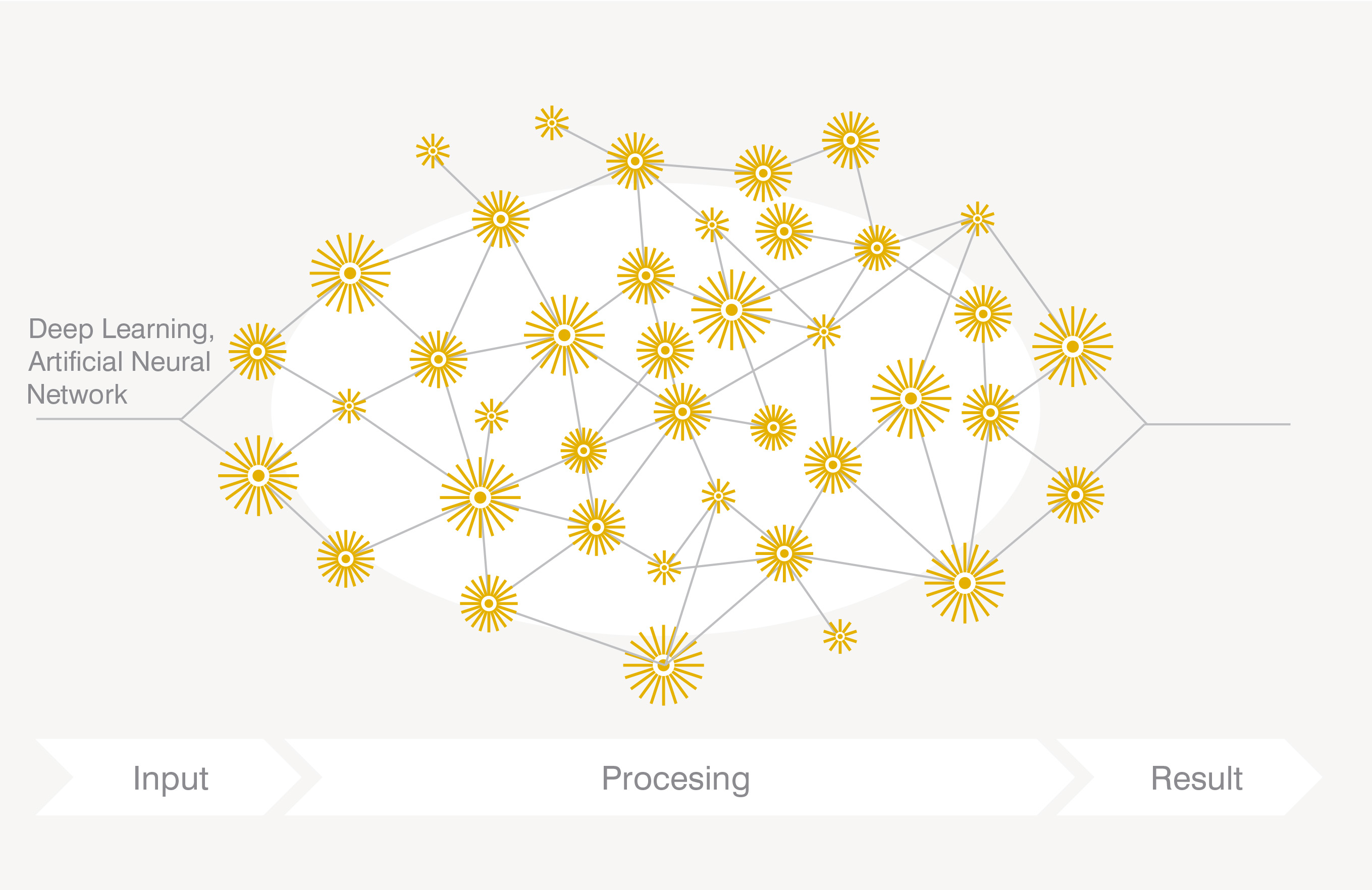

What has this to do with writing? Well, my science fiction is set in the near future, and it’s a fair bet that many of the problems that afflict software today will still afflict it in a few decades. And the situation is blurred by my assumption that AI systems will have advanced to the point where genuinely intelligent individuals (“personas”) exist and interact with humans. In this case, “blame-the-computer” might come back into fashion. Right now, with the imminent advent of self-driving cars on our roads, we have a whole raft of social, ethical, and legal problems emerging about responsibility for problems caused. The software used is intelligent in the limited sense of doing lots of pattern recognition, and combining multiple different sources of data to arrive at a decision, but is not in any sense self-aware. The coding team is responsible, and can in principle unravel any decision taken, and trace it back to triggers based on inputs into their code.

As and when personas come along, things will change. Whoever writes the template code for a persona will provide simply a starting point, and just as humans vary according to both nature and nurture, so will personas. As my various stories unfold, I introduce several “generations” of personas – major upgrades of the platform with distinctive traits and characteristics. But within each generation, individual personas can differ pretty much in the same way that individual people do. What will this mean for our present ability to blame the computer? I suppose it becomes pretty much the same as what happens with other people – when someone does something wrong, we try to disentangle nature from nurture, and decide where responsibility really lies.

Meanwhile, for a bit of fun, here’s a YouTube speculation, “If HAL-9000 was Alexa”…