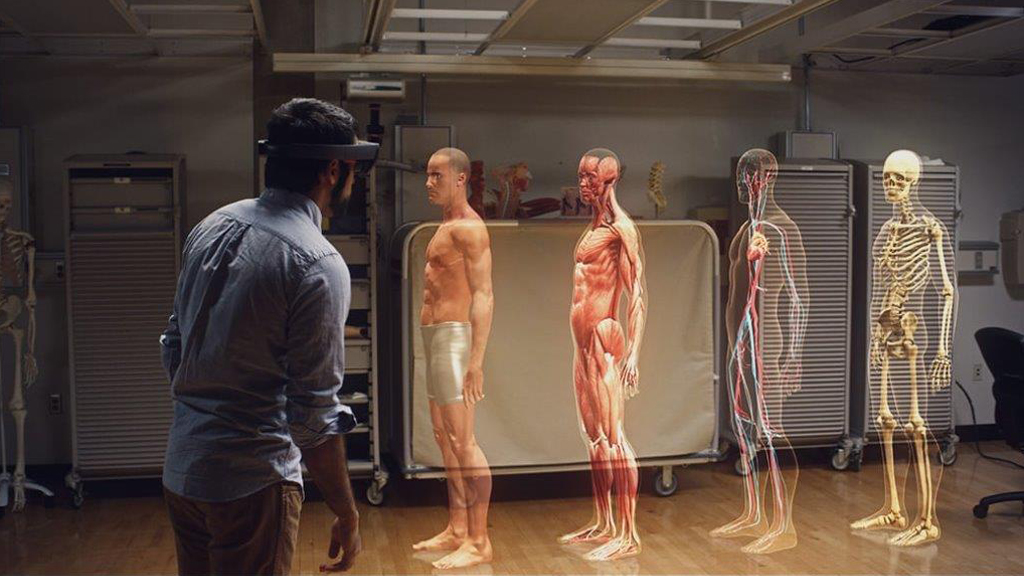

I was at the annual Amazon technical summit here in London last week, and today’s blog post is based on something I heard one of the presenters say. On the whole it was a day of consolidating things already developed, rather than a day of grand new breakthroughs, and I enjoyed myself hearing about enhancements to voice and natural language services, together with an offbeat session on building virtual 3D worlds.

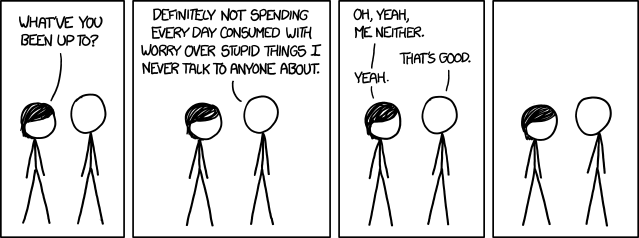

But I want to focus on one specific idea, contrasting how we build human-computer interfaces quite differently for the eye and the ear. In short, “the eye prefers repetition, the ear prefers variety”. Look at the appearance of your typical app on a computer or phone. We have largely standardised where the key elements go – menu, options, title and so on. They are so standardised that we can tell at a glance if something is “in the wrong place”. The text stays the same every time you open it. The icons stay the same, unless they have a little overlay telling you to do something with them. And so on.

Now in the middle of a technical session I just let that statement drift by, but it stuck with me afterwards, and I kept turning it over. Hence this post. At face value it seemed a bit odd – our eyes are constantly bombarded with hugely diverse information from the world around us. But then I started thinking some more. It’s not just to do with the light falling into our eyes, or the biology of how our visual receptors handle that – our image of the world is the end result of a very complex series of processing steps inside our nervous system.

A child’s picture of a face, or a person, is instantly recognisable as such, even though reduced to a few schematic shapes. A sketch artist will make a few straight lines and a curve, and we know we are looking at a house beside a beach, even though there are no colours or textures to help us. The animal kingdom shows us the same thing. Show a toad a horizontal line moving sideways, and it reacts as though it was a worm. Turn the line vertical and move it in the same way, and the toad ignores it (see this Wikipedia article or this video for details). Arrange a dark circle over a mouse and increase its size, and it reacts with fear and aggression, as though something was looming over it (see this article, in the section headed Visual threat cues).

It’s not difficult to see why – if you think you might be somebody’s prey, you react to the first sign of the predator. If you’re wrong, all you’ve lost is some time and adrenalin. If you ignore the first signs and you’re wrong, it’s game over!

So it makes sense that our visual sense, including nervous system as well as eyes, reduces the world to a few key features. We skim over fine detail at first glance, and only really notice it when we need to – when we deliberately turn our attention to it.

Also, there’s something to be learned from how light and sound work differently for us. At a very fundamental level, light adds up to give a single composite result. We mix red and yellow paint to give orange, or red and green light on a computer screen to give yellow. The colour tints, or the light waves, add up to make a single average colour. Not so with sound. Play the note middle C on a keyboard, then start playing the G above it. You end up with a chord – you don’t end up with a single note which is a blend of the two. So adding visual signals, and adding audible ones, give completely different effects.

Finally, the range of what we can perceive is entirely different. The most extreme violet light that we can see has about twice the frequency of the most extreme red. Doubling frequency gives us an octave change, so that means we can see one octave of visible light out of the entire spectrum. But a keen listener under ideal circumstances can hear from about 12 Hz to nearly 30 kHz, which is a range of around eleven octaves (the more commonly quoted 20 Hz to 20 kHz is about ten). Some creatures do a bit better than us in both light and sound detection, but the basic message is the same – we hear a much more varied spectrum than we see.
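For anyone who wants to check the octave arithmetic, here is a small sketch in Python. The number of octaves between two frequencies is just the base-2 logarithm of their ratio. The hearing endpoints are the ones quoted above; the visible-light endpoints (roughly 400 THz for deep red and 790 THz for violet) are my own approximate assumptions, and the function name `octaves` is simply one I made up for the purpose.

```python
import math


def octaves(low_hz: float, high_hz: float) -> float:
    """Number of octaves (doublings of frequency) between two frequencies."""
    return math.log2(high_hz / low_hz)


# Approximate visible-light limits (assumed figures, in Hz).
light = octaves(400e12, 790e12)

# Hearing limits for a keen listener in ideal conditions, as quoted in the text.
sound = octaves(12, 30_000)

print(f"visible light: {light:.1f} octaves")
print(f"hearing:       {sound:.1f} octaves")
```

With those endpoints the light figure comes out at about one octave and the hearing figure at a little over eleven, so the ear wins by a wide margin whichever exact limits you prefer.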

Now, the technical message behind that speaker’s statement related to Alexa skills. To retain a user’s interest, the skill has to not sound the same every time. The eye prefers repetition, so our phone apps look the same each time we start them. But the ear prefers variety, so our voice skills have to mirror that, and say something a little bit different each time.
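As a rough illustration of what “say something a little bit different each time” might look like in code, here is a minimal sketch in Python. It is not the Alexa Skills Kit API, just plain Python; the phrases, the `GREETINGS` list and the `pick_variant` function are all hypothetical names of my own. The idea is simply to keep several wordings of the same message and avoid repeating whichever one was used last.

```python
import random

# Hypothetical alternative wordings for the same greeting.
GREETINGS = [
    "Welcome back. What would you like to do?",
    "Hello again. How can I help?",
    "Good to hear from you. What's next?",
]


def pick_variant(variants, last=None):
    """Pick a phrase at random, avoiding the one used last time if possible."""
    choices = [v for v in variants if v != last]
    if not choices:  # only one wording available, so repeat it
        choices = list(variants)
    return random.choice(choices)


# Simulate three sessions in a row: no two consecutive greetings match.
last = None
for _ in range(3):
    last = pick_variant(GREETINGS, last)
    print(last)
```

A real skill would need to remember the last phrase between sessions somewhere, which this sketch leaves out.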

I wonder how that applies to writing?