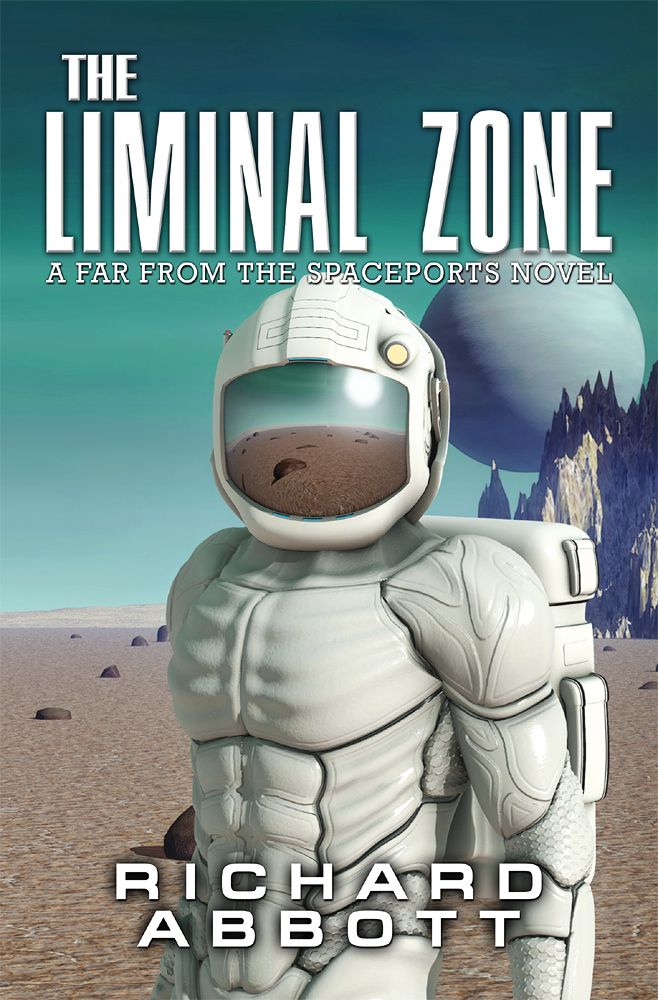

Before starting this blog post properly, I should mention that my latest novel in the Far from the Spaceports series – called The Liminal Zone – is now on pre-order at Amazon in Kindle format. The link is https://www.amazon.co.uk/gp/product/B087JP2GJP. Release date is May 17th. For those who prefer paperback, that version is in the later stages of preparation and will be ready shortly. For those who haven’t been following my occasional posts, it’s set about twenty or so years on from the original book, out on Pluto’s moon Charon, and has a lot more to do with first extraterrestrial contact than financial crime!

Back to this week’s post, and as a break from the potential for life on exoplanets, I thought I’d write about AI and its (current) lack of common sense. AI individuals – called personas – play a big role in my science fiction novels, and I have worked on and off with software AI for quite a few years now. So I am well aware that the kind of awareness and sensitivity which my fictional personas display is vastly different from current capabilities. But then, I am writing about events set somewhere in the next 50-100 years, and I am confident that by that time, AI will have advanced to the point that personas are credible. I am not nearly so sure that within the next century we’ll have habitable bases in the asteroid belt, let alone on Charon, but that’s another story.

What are some of the limitations we face today? Well, all of the best-known AI devices, for all that they are streets ahead of what we had a decade ago, are extremely limited in their capacity to have a real conversation. Some of this is context, and some is common sense (and some other factors that I’m not going to talk about today).

Context is the ability that a human conversation partner has to fill in gaps in what you are saying. For example, if I say “When did England last win the Ashes?”, you may or may not know the answer, but you’d probably realise that I was talking about a cricket match, and (maybe with some help from a well-known search engine) be able to tell me. If I then say “And where were they playing?”, you have no difficulty in realising that “they” still means England, and the whole question relates to that Ashes match. You are holding that context in your mind, even if we’ve chatted about other stuff in the meantime, like “what sort of tea would you like?” or “will it rain tomorrow?”. I could go on to other things, like “Who scored most runs?” or “Was anybody run out?” and you’d still follow what I was talking about.

I just tried this out with Alexa. “When did England last win the Ashes?” does get an answer, but not to the right question – instead I learned when the next Ashes was to be played. A bit of probing got me the answer to who won the last such match (in fact a draw, which was correctly explained)… but only if I asked the question in fairly quick succession after the first one. If I let some time go by before asking “Where were they playing?”, what I get is “Hmmm, I don’t know that one”. Alexa loses the context very quickly. Now, as an Alexa developer I know exactly why this is – the first question opens a session, and during that session some context is carefully preserved: the development team decides what information is going to be repeatedly passed to and fro as Alexa and I exchange comments. While the session lasts, further questions within the defined context can be handled. Once the session closes, the contextual information is discarded. (If I were a privacy campaigner, I’d be very pleased that it was discarded, but as a keen AI enthusiast I’m rather disappointed.) With the Alexa skills that I have written (and you can find them on the Alexa store on Amazon by searching for DataScenes Development), I try to keep the fiction of conversation going by retaining a decent amount of context, but it is all very focused on one thing. If you’re using my Martian Weather skill and then assume you can start asking about Cumbrian Weather, on the basis that they are both about weather, then Alexa won’t give you a sensible answer. It doesn’t take long at all to get Alexa in a spin – for some humour about this, check out this YouTube link – https://www.youtube.com/watch?v=JepKVUym9Fg…
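For anyone curious what that session behaviour looks like in code, here is a minimal Python sketch. It is emphatically not the Alexa SDK or how Amazon implements anything: the `Session` class, its `attributes` dictionary, the 8-second timeout and the keyword matching are all made up for illustration. The only point is that context lives in the session, and vanishes when the session goes idle.

```python
import time


class Session:
    """Hypothetical conversation session that forgets its context when idle."""

    def __init__(self, timeout_seconds=8.0, clock=time.monotonic):
        self.timeout_seconds = timeout_seconds  # arbitrary illustrative value
        self.clock = clock                      # injectable so it can be tested
        self.attributes = {}                    # the context passed to and fro
        self.last_active = None

    def _expire_if_idle(self):
        # Discard all context if too much time has passed since the last question.
        now = self.clock()
        if self.last_active is not None:
            if now - self.last_active > self.timeout_seconds:
                self.attributes.clear()
        self.last_active = now

    def ask(self, question):
        self._expire_if_idle()
        text = question.lower()
        if "ashes" in text:
            # A question that names its subject establishes the context.
            self.attributes["team"] = "England"
            self.attributes["topic"] = "the Ashes"
            return "Answering a question about England and the Ashes."
        if "they" in text.split():
            # A follow-up only makes sense if the context is still held.
            team = self.attributes.get("team")
            topic = self.attributes.get("topic")
            if team and topic:
                return f"Taking 'they' to mean {team}, still talking about {topic}."
        return "Hmmm, I don't know that one"


if __name__ == "__main__":
    session = Session()
    print(session.ask("When did England last win the Ashes?"))
    print(session.ask("Where were they playing?"))
```

Ask the follow-up straight away and “they” resolves to England; wait longer than the timeout and the same question falls through to the “don’t know” response, which is just the behaviour I saw.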

So context is one thing, but common sense is another. Common sense is the ability to tap into a broad understanding of how things work, in order to fill in what would otherwise be gaps. It allows you to make reasonable decisions in the face of uncertainty or ambiguity. For example, if I say “a man went into a bar. He ordered fish and chips. When he left, he gave the staff a large tip”, and then say “what did he eat?”, common sense will tell you that he most likely ate fish and chips. Strictly speaking, you don’t know that – he might have ordered it for someone else. It might have arrived at his table on the outdoor terrace but been stolen by a passing jackdaw. In the strictest logical sense, I haven’t given you enough information to say for sure, and you can concoct all kinds of scenarios where weird things happened and he did not, in fact, eat fish and chips… but the simplest guess, and the most likely one, is that this is exactly what he did.

In passing, Robert Heinlein, in his very long novel Stranger in a Strange Land, assumed the existence of people whose memory, and whose capacity for not making assumptions, meant that they could serve in courts of law as “fair witnesses”, describing only and exactly what they had seen. So if asked what colour a house was, they would answer something like “the house was white on the side facing me” – with no assumption about the other sides. All very well for legal matters, but I suspect the conversation would get boring quite quickly if they carried that over into personal life. They would run out of friends before long…

Now, what is an AI system to do? How do we code common sense into artificial intelligence, which by definition has not had any kind of birth and maturation process parallel to a human one (though there probably has been a period of training in a specific subject)? By and large, we learn common sense (or in some people’s case, don’t learn it) by watching how those around us do things – family, friends, school, peers, pop stars or sports people, and so on. We pick up, without ever really trying to, what kinds of things are most likely to have happened, and how people are likely to have reacted. But a formalised way of imparting common sense has eluded AI researchers for over fifty years now. There have been attempts to reduce common sense to a long catalogue of “if this then that” statements, but there are so many special cases and contradictions that these attempts have got bogged down. There have been attempts to assign probabilities to particular individual outcomes, so that a machine system trying to find its way through a complex decision would pick out the most likely option from the combination of possibilities. To date, none have really worked, and encoding common sense into AI remains a challenging problem. We have AI software which can win at Go and other games, but cannot then go on to hold an interesting conversation about other topics.
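To give a flavour of those two approaches, here is a toy Python sketch built around the fish and chips example. Everything in it is invented for illustration: the rule and exception tables, the function names, and especially the probabilities, which are plucked out of the air rather than measured. It is not how any real system works, just a cartoon of the two ideas.

```python
# Approach 1: a catalogue of "if this then that" statements.
# Each rule maps something observed to a default conclusion.
RULES = {
    "ordered fish and chips": "ate fish and chips",
}

# Special cases that override a default. In real life this table never
# stops growing, which is where these attempts got bogged down.
EXCEPTIONS = {
    ("ordered fish and chips", "ordered for someone else"): "did not eat fish and chips",
    ("ordered fish and chips", "jackdaw stole the food"): "did not eat fish and chips",
}


def conclude_by_rules(event, extra_facts=()):
    """Return the first matching exception, else the default rule, else 'unknown'."""
    for fact in extra_facts:
        if (event, fact) in EXCEPTIONS:
            return EXCEPTIONS[(event, fact)]
    return RULES.get(event, "unknown")


# Approach 2: assign a probability to each outcome and pick the likeliest.
# These numbers are made up purely for the example.
OUTCOMES = {
    "ate fish and chips": 0.95,
    "ordered it for someone else": 0.04,
    "lost it to a jackdaw": 0.01,
}


def most_likely(outcomes):
    """Return the outcome with the highest assigned probability."""
    return max(outcomes, key=outcomes.get)


if __name__ == "__main__":
    print(conclude_by_rules("ordered fish and chips"))
    print(conclude_by_rules("ordered fish and chips", ["jackdaw stole the food"]))
    print(most_likely(OUTCOMES))
```

Both versions cope with the one story they were written for. The trouble starts with the next story, and the one after that: every new situation needs new rules, new exceptions, or new numbers that somebody has to supply.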

All of which is of great interest to me as an author – if I am going to make AI personas appear capable of operating as working partners and as friends to people, they have to be a lot more convincing than Alexa or any of her present-day cousins. Awareness of context and common sense go a long way towards achieving this, and hopefully, the personas of Far from the Spaceports, and the following novels through to The Liminal Zone, are convincing in this way.